Dataflow Analysis to Semi-Automatically Find Chainer Bugs

Preface

As a system software researcher working for an "artificial intelligence research center" (you know, one of many), I use Chainer to explore what kind of system characteristics and support real AI applications need. Chainer is really good for this purpose because the framework itself is really simple, so it is easy to hack as you wish.

Although the framework is intensively maintained, I sometimes happen to find bugs, especially when I use it in a slightly different way than usual. This post explains a tiny, tiny idea I came up with to (kind of) semi-automatically find a certain type of bug in Chainer.

The Idea

So the idea is "the forward and the backward propagations for the same calculation are supposed to do similar things, especially for preparation".

For example, both forward and backward of the linear function convert the first input into a matrix and assign the result to x (x = _as_mat(inputs[0])), and assign the second input to W (W = inputs[1]).

Given this idea, I extract all assignments for each variable and compare the extracted assignments between the forward and backward functions.

If there is a variable with the same name in forward and backward but with different assignments, it might be a potential bug.

In the linear example, both x and W have the same assignments in forward and backward.
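The idea can be sketched with Python's standard ast module. This is a minimal illustration under my own assumptions, not the actual chainer_dataflow.py script: the SOURCE string is a made-up stand-in for a Chainer function (make_contiguous is a hypothetical helper name), and only plain assignments (ast.Assign) are considered.

```python
import ast
from collections import defaultdict

# Hypothetical stand-in for a Chainer function; this is NOT real Chainer code.
SOURCE = '''
class Linear:
    def forward(self, inputs):
        x = _as_mat(inputs[0])
        W = inputs[1]
        b = inputs[2]
        b = make_contiguous(b)
        return x, W, b

    def backward(self, inputs, grad_outputs):
        x = _as_mat(inputs[0])
        W = inputs[1]
        b = inputs[2]
        return x, W, b
'''


def target_names(target):
    """Yield the plain variable names bound by an assignment target."""
    if isinstance(target, ast.Name):
        yield target.id
    elif isinstance(target, (ast.Tuple, ast.List)):
        for element in target.elts:
            yield from target_names(element)


def collect_assignments(func, lines):
    """Map each variable assigned in func to (line number, first source line) pairs."""
    found = defaultdict(list)
    for node in ast.walk(func):
        if isinstance(node, ast.Assign):
            for target in node.targets:
                for name in target_names(target):
                    code = lines[node.lineno - 1].strip()
                    found[name].append((node.lineno, code))
    for entries in found.values():
        entries.sort()  # ast.walk is breadth-first, so restore source order
    return found


def compare(source, fwd_name="forward", bwd_name="backward"):
    """Return the variables whose assignments differ between the two functions."""
    lines = source.splitlines()
    funcs = {node.name: node for node in ast.walk(ast.parse(source))
             if isinstance(node, ast.FunctionDef)}
    fwd = collect_assignments(funcs[fwd_name], lines)
    bwd = collect_assignments(funcs[bwd_name], lines)
    report = {}
    for name in sorted(set(fwd) & set(bwd)):
        if [c for _, c in fwd[name]] != [c for _, c in bwd[name]]:
            report[name] = (fwd[name], bwd[name])
    return report


report = compare(SOURCE)
for name, (fwd, bwd) in report.items():
    print("different data flow! (", name, ")")
    print("forward:")
    for lineno, code in fwd:
        print(lineno, code)
    print("backward:")
    for lineno, code in bwd:
        print(lineno, code)
```

With this toy input only b is reported, because x and W are assigned identically in both functions while b gets an extra assignment in forward.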

Bugs It Found

Let's see how it works. Here is the code I wrote to extract the assignments and compare them.

You have to set the names of the forward and backward functions by hand (lines 13 and 15 of the script), depending on whether they are vanilla forward/backward, forward_cpu/backward_cpu, or forward_gpu/backward_gpu.

Clone the Chainer repository and check out a commit from before the bug I found with this method was fixed.

After that, apply my script to chainer/chainer/functions/connection/deconvolution_2d.py.

    $ git clone chainer && cd chainer
    $ git checkout e6a7ec62773f0df0e3e0
    $ ~/src/chainer_dataflow/chainer_dataflow.py chainer/functions/connection/deconvolution_2d.py
    different data flow! ( b )
    forward:
    111 b = inputs[2] if len(inputs) == 3 else None
    137 b = cuda.cupy.ascontiguousarray(b)
    backward:
    228 b = inputs[2] if len(inputs) == 3 else None
    --------------------------------------------------
    different data flow! ( kh )
    forward:
    123 kh, kw = W.shape[2:]
    backward:
    242 _, out_channels, kh, kw = W.shape
    --------------------------------------------------
    different data flow! ( kw )
    forward:
    123 kh, kw = W.shape[2:]
    backward:
    242 _, out_channels, kh, kw = W.shape
    --------------------------------------------------
    different data flow! ( c )
    forward:
    125 c = W.shape[1] # out_c
    backward:
    243 c, h, w = gy.shape[1:]
    --------------------------------------------------
    different data flow! ( algo )
    forward:
    160 algo = libcudnn.getConvolutionBackwardDataAlgorithm(
    165 algo = cuda.cupy.cuda.cudnn.CUDNN_CONVOLUTION_BWD_DATA_ALGO_1 # NOQA
    backward:
    258 algo = libcudnn.getConvolutionForwardAlgorithm(
    283 algo = libcudnn.getConvolutionBackwardFilterAlgorithm(
    288 algo = cuda.cupy.cuda.cudnn.CUDNN_CONVOLUTION_BWD_FILTER_ALGO_1 # NOQA
    --------------------------------------------------

There are many outputs, but (unfortunately) only the first one (b) is relevant here.

The output shows that, in forward, b is assigned from inputs[2] in line 111 and converted into a C-contiguous array in line 137.

However, in backward, b is assigned in line 228 and that's it, with no conversion to C-contiguous, which is a bug (#2666).

In the same way, it can also find a similar bug such as #2582 (do not forget to set lines 13 and 15 of chainer_dataflow.py to forward and backward, instead of forward_gpu and backward_gpu). This bug fix is actually the one that motivated me to try this idea.

Here's another example:

    $ git checkout e6a7ec62773f0df0 # same commit as the above
    $ ~/chainer_dataflow.py chainer/functions/connection/dilated_convolution_2d.py
    ...
    ...
    --------------------------------------------------
    different data flow! ( x_desc )
    forward:
    133 x_desc = cudnn.create_tensor_descriptor(xji)
    backward:
    247 x_desc = cudnn.create_tensor_descriptor(x)
    --------------------------------------------------

In this case, x_desc is assigned tensor descriptors created from different tensors, which was actually not a critical bug but a naming inconsistency (#2665).

Limitations and Potential Extensions

Because both the idea and the script are very simple, of course there are many limitations. The aim of this post is not to be "a research paper that claims super novelty", but to share the idea with other people in the hope that they come up with a more clever idea based on mine, which would be beneficial to the whole community. One obvious limitation is that it yields a loooot of false positives. It might be useful to define a threshold of "relevant difference level".

A possible way to extend the idea that I have in mind is to compare the code not only between forward and backward, but also between forward_cpu and forward_gpu.

This is based on the thought that some preparation code must be shared by the cpu mode and the gpu mode.

For example, #2589 fixed a missing assertion in the gpu mode code that already existed in the cpu mode code.

Debian 9 uses Kernel 4.9 that Supports PEBS Better

Preface

In the previous post I installed Debian 8 (jessie) on a Thinkpad X260, but I actually changed my mind and re-installed with Debian 9 (stretch), because it supports the wifi chip in the Thinkpad X260. A good thing is that Debian 9 is already frozen, so I can expect only a few critical bugs to remain (well, there are actually one to two hundred of them as of today, but that's a relatively small number given that it has over 40K packages).

One big difference between Debian 9 and 8 is the kernel versions they use (4.9 vs 3.16), and in particular the support for Intel PEBS (Precise Event Based Sampling) is way better (or I have to say way more proper) in kernel 4.9. This post briefly explains what PEBS is and how its support gets better if you use kernel 4.9.

Precise Event Based Sampling (PEBS)

PEBS is an extension of the performance counters, which are a mechanism to measure various hardware events such as the number of cache misses, the number of branch mispredictions, and many, many others. If you're not familiar with the performance counters, please refer to another site like this.

PEBS can be used from the Linux perf tool by adding the pp modifier after the counter name, such as:

    # specify a counter by the name
    $ perf record -e cpu/mem-loads/pp -- workload

    # specify a counter by its number
    $ perf record -e r20D1:pp -- workload

An advantage of PEBS over the normal performance counters is that, as the name suggests, PEBS is more precise because it's all hardware-based.

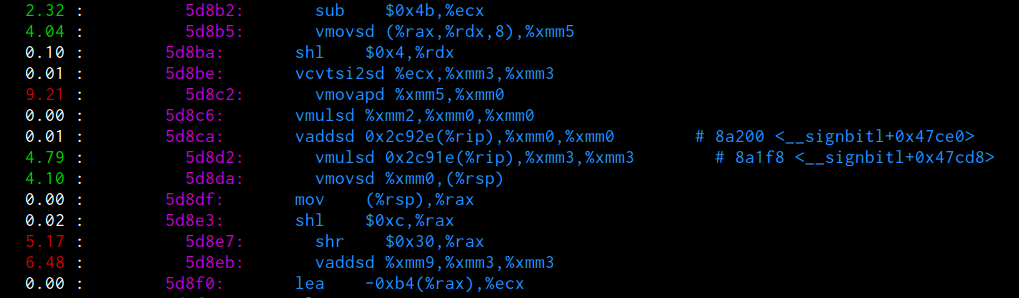

For example, a result of measuring r20D1 without pp might look like this (the result is rendered by perf annotate):

Because r20D1 measures the number of "Retired load instructions missed L3", it can never happen on instructions other than the ones accessing memory addresses.

However, this result shows that 2.32% of them occurred in a sub between two registers, 9.21% in a mov between two registers, etc.

(An excuse for this is that, for function-level performance analysis, this accuracy might be enough. Even if some events drift by a few instructions, the outcome can be the same if you look at them at function-level granularity.)

For the explanation of each counter, you can refer to section 19 of volume 3 of the super thick manual from Intel.

Note that the event number and the umask have to be specified to perf in the reversed order of how they appear in the manual.

For example if you measure a counter whose event number is AA and the umask is BB, you have to do perf record -e rBBAA (not rAABB).
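The ordering rule above can be written down as a tiny helper. This is just a sketch to make the byte order explicit; raw_event is a name I made up for illustration and is not part of perf.

```python
def raw_event(event, umask, precise=0):
    """Build a perf raw event name: 'r', then the umask byte, then the event byte."""
    if not (0 <= event <= 0xFF and 0 <= umask <= 0xFF):
        raise ValueError("event and umask must each fit in one byte")
    name = "r{:02X}{:02X}".format(umask, event)
    if precise:
        # Append the precise modifier, e.g. ':pp' for precise=2.
        name += ":" + "p" * precise
    return name


print(raw_event(0xD1, 0x20))             # r20D1
print(raw_event(0xD1, 0x20, precise=2))  # r20D1:pp
print(raw_event(0xAA, 0xBB))             # rBBAA
```

The resulting string is what goes after -e in perf record.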

Using PEBS by specifying :pp for the same workload gives a result like this:

Now you can see that no r20D1 occurs on any instructions without memory accesses.

Another huge advantage of PEBS is that it supports retrieving the register values, the instruction pointer, the memory address accessed, and the source of the data at the time the instruction triggering the event is executed. However, explaining these requires a whole new long post, so I just leave it to another manual from Intel.

How PEBS is handled in the kernel

Not all counters support PEBS, so the Linux kernel holds a list of the counters that are PEBS-capable. For Skylake and Kaby Lake, PEBS is supported for the counters which have "PS" or "PSDLA" in the comment column of the manual. For Broadwell or older CPUs, the manual says "Supports PEBS" in the comment column for PEBS-capable counters.

This list is defined in arch/x86/kernel/cpu/perf_event_intel_ds.c in kernel 3.16 and arch/x86/events/intel/ds.c in kernel 4.9.

The problem is that the list in kernel 3.16 at the time Debian 8 was released was not complete.

For a concrete example, r20D1 (event number=0xD1, umask=0x20) used in the above example is PEBS-capable, but it is not listed in linux-source-3.16 of Debian 8.

(Note that it is listed in the newest version of kernel 3.16 on kernel.org, which means it was fixed at some point after Debian 8 was released.)

In perf_event_intel_ds.c from linux-source-3.16 package of Debian 8, the list is defined as follows:

    struct event_constraint intel_hsw_pebs_event_constraints[] = {
        INTEL_UEVENT_CONSTRAINT(0x01c0, 0x2), /* INST_RETIRED.PRECDIST */
        INTEL_PST_HSW_CONSTRAINT(0x01c2, 0xf), /* UOPS_RETIRED.ALL */
        INTEL_UEVENT_CONSTRAINT(0x02c2, 0xf), /* UOPS_RETIRED.RETIRE_SLOTS */
        INTEL_EVENT_CONSTRAINT(0xc4, 0xf), /* BR_INST_RETIRED.* */
        INTEL_UEVENT_CONSTRAINT(0x01c5, 0xf), /* BR_MISP_RETIRED.CONDITIONAL */
        INTEL_UEVENT_CONSTRAINT(0x04c5, 0xf), /* BR_MISP_RETIRED.ALL_BRANCHES */
        INTEL_UEVENT_CONSTRAINT(0x20c5, 0xf), /* BR_MISP_RETIRED.NEAR_TAKEN */
        INTEL_PLD_CONSTRAINT(0x01cd, 0x8), /* MEM_TRANS_RETIRED.* */
        /* MEM_UOPS_RETIRED.STLB_MISS_LOADS */
        INTEL_UEVENT_CONSTRAINT(0x11d0, 0xf),
        /* MEM_UOPS_RETIRED.STLB_MISS_STORES */
        INTEL_UEVENT_CONSTRAINT(0x12d0, 0xf),
        INTEL_UEVENT_CONSTRAINT(0x21d0, 0xf), /* MEM_UOPS_RETIRED.LOCK_LOADS */
        INTEL_UEVENT_CONSTRAINT(0x41d0, 0xf), /* MEM_UOPS_RETIRED.SPLIT_LOADS */
        /* MEM_UOPS_RETIRED.SPLIT_STORES */
        INTEL_UEVENT_CONSTRAINT(0x42d0, 0xf),
        INTEL_UEVENT_CONSTRAINT(0x81d0, 0xf), /* MEM_UOPS_RETIRED.ALL_LOADS */
        INTEL_PST_HSW_CONSTRAINT(0x82d0, 0xf), /* MEM_UOPS_RETIRED.ALL_STORES */
        INTEL_UEVENT_CONSTRAINT(0x01d1, 0xf), /* MEM_LOAD_UOPS_RETIRED.L1_HIT */
        INTEL_UEVENT_CONSTRAINT(0x02d1, 0xf), /* MEM_LOAD_UOPS_RETIRED.L2_HIT */
        INTEL_UEVENT_CONSTRAINT(0x04d1, 0xf), /* MEM_LOAD_UOPS_RETIRED.L3_HIT */
        /* MEM_LOAD_UOPS_RETIRED.HIT_LFB */
        INTEL_UEVENT_CONSTRAINT(0x40d1, 0xf),
        /* MEM_LOAD_UOPS_LLC_HIT_RETIRED.XSNP_MISS */
        INTEL_UEVENT_CONSTRAINT(0x01d2, 0xf),
        /* MEM_LOAD_UOPS_LLC_HIT_RETIRED.XSNP_HIT */
        INTEL_UEVENT_CONSTRAINT(0x02d2, 0xf),
        /* MEM_LOAD_UOPS_LLC_MISS_RETIRED.LOCAL_DRAM */
        INTEL_UEVENT_CONSTRAINT(0x01d3, 0xf),
        INTEL_UEVENT_CONSTRAINT(0x04c8, 0xf), /* HLE_RETIRED.Abort */
        INTEL_UEVENT_CONSTRAINT(0x04c9, 0xf), /* RTM_RETIRED.Abort */
        EVENT_CONSTRAINT_END
    };

I won't explain what each INTEL_* macro means, but the point here is that the kernel treats a counter rXXYY as PEBS-capable if there's a line like INTEL_SOMETHING_CONSTRAINT(0xXXYY, 0xf).

You can see there are r01D1, r02D1, r04D1, and r40D1, but no r20D1, even though r20D1 is described as PEBS-capable on Haswell in the Intel manual.

Note that Haswell was the latest Core generation at the time of the kernel 3.16 release, and for newer CPU generations such as Skylake the Linux kernel just treats them as Haswell.

Therefore, if you try to measure r20D1:pp in Debian 8, it yields an error:

    $ perf record -e r20D1:pp -- workload
    'precise' request may not be supported. Try removing 'p' modifier.

This issue has already been fixed in kernel 4.9.

Therefore Debian 9, which uses kernel 4.9, can properly handle r20D1 as PEBS-capable and allows perf to measure r20D1:pp.

The Linux kernel 4.9 defines the list of PEBS-supported counters in arch/x86/events/intel/ds.c (only the relevant part is extracted):

    struct event_constraint intel_hsw_pebs_event_constraints[] = {
        ...
        INTEL_FLAGS_EVENT_CONSTRAINT_DATALA_XLD(0xd1, 0xf), /* MEM_LOAD_UOPS_RETIRED.* */
        ...
    }

This macro specifies that any counter whose event number is D1 (that is, rXXD1 for any umask XX) is PEBS-capable.
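The difference between the two lists can be modelled in a few lines. This is a simplified sketch of the matching described above, not the kernel's actual code: the two lists contain only the 0xD1 entries quoted in this post, and the names (EVENT_ONLY, EVENT_AND_UMASK, is_pebs_capable) are my own.

```python
EVENT_ONLY = 0x00FF       # compare the event number only
EVENT_AND_UMASK = 0xFFFF  # compare umask and event number together

# (code, mask) pairs for event 0xD1, taken from the two listings in this post.
DEBIAN8_3_16 = [
    (0x01D1, EVENT_AND_UMASK),  # MEM_LOAD_UOPS_RETIRED.L1_HIT
    (0x02D1, EVENT_AND_UMASK),  # MEM_LOAD_UOPS_RETIRED.L2_HIT
    (0x04D1, EVENT_AND_UMASK),  # MEM_LOAD_UOPS_RETIRED.L3_HIT
    (0x40D1, EVENT_AND_UMASK),  # MEM_LOAD_UOPS_RETIRED.HIT_LFB
]
KERNEL_4_9 = [
    (0xD1, EVENT_ONLY),         # MEM_LOAD_UOPS_RETIRED.*
]


def parse_raw(name):
    """Turn a perf raw event name such as 'r20D1' into its numeric config."""
    return int(name[1:], 16)


def is_pebs_capable(config, constraints):
    """Return True if any constraint matches the config under its mask."""
    return any((config & mask) == code for code, mask in constraints)


config = parse_raw("r20D1")
print(is_pebs_capable(config, DEBIAN8_3_16))  # False
print(is_pebs_capable(config, KERNEL_4_9))    # True
```

In this model r20D1 is rejected by the older list only because no entry spells out umask 0x20, while the single event-only entry of the newer list covers every umask of event 0xD1.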

Summary

If you use special hardware functionalities such as PEBS, I do recommend upgrading your distro and the kernel.

PEBS has existed since Pentium 4, but the supported counters are ever growing and changing (actually r20D1 counted the number of micro operations until Broadwell, but it was changed to count the number of instructions from Skylake on).

So you'd better use as recent a kernel as you can to get proper support, and using the latest distro might be an easy way to go.

A newbie's guide for CPU architecture names

The aim of this post

This post aims to help computer newbies, or those who work in the application layer but somehow have to buy a new server and install Linux on it, and are confused by the complex naming of CPU architectures (i386, x86, IA64, ...).

Note that this post shows which CPU "instruction set" architectures are compatible/different, but it does not step into how individual "micro" architectures differ (e.g. Skylake vs Broadwell).

At a glance

If more than one name is shown in the "Arch Name" column, you can expect they all mean the same thing. If you're interested in why a single instruction set architecture has several names, you can refer to the history section below.

| Arch Name | Description | Examples |
|---|---|---|
| x64, x86_64, AMD64, EM64T, Intel 64 | So-called "64 bit CPUs". Note that AMD64 (not IA64) is in this group. Software for AMD64 works on your 64 bit CPUs from Intel, but software for IA64 doesn't. Note also that IA64 and Intel 64 are different. | Core i3/5/7, Xeon E3/5/7, Core 2 Duo, Opteron, Phenom, Athlon 64, ... |
| x86, i386, IA32 | So-called "32 bit CPUs". Normally you never buy a new machine in this group, but old servers might still use it. | Pentium 2/3/4/D *1, Celeron M/D, Athlon *2, Duron, ... |
| IA64 | Another 64 bit architecture from Intel, but it never became popular. Don't use it unless you're 100% sure what you're doing. IA64 is 0% compatible with x86 or x64. | Itanium, Itanium2 |
| Power (IBM) | Currently the only way to use NVLink between the CPU memory and the GPU memory. You might want it if you do deep learning with ~100GB of data. Not sure if it's a good investment as Intel should get NVLink compatibility sooner or later. | Power 7, ... |
| ARM | Currently used only for embedded and mobile devices. Don't worry about them for now if you live in the app layer (this might change in a few years though). | Cortex-A9, ... |
| MIPS | Ditto. | don't really know |

*1: The Pentium and Celeron brandings are a bit confusing. As the names were reused after 64 bit-ization, there are also 64 bit versions of Pentium and Celeron.

*2: Athlon is even worse because the original 32 bit version was "Athlon", then 64 bit versions named "Athlon 64" were released, and after that AMD removed "64" from later 64 bit versions so they're named just "Athlon" again (like iPad -> iPad3 -> the new iPad).

The history

OK, so why does a single instruction set architecture have so many names? Here's the history.

x86, i386, IA32

They originate from the old Intel CPU series: 80386, 80486, 80586, 80686, etc. The 80386 was the first 32 bit CPU from Intel, and the successive ones were named 80486, 80586, 80686, ..., so from some point they started to be called x86 (x can refer to 3, 4, 5, 6, ...). The instruction set architecture used in the 80386 was called i386 ("i" from "I"ntel), but people refer to the architecture (i386) and its implementations (x86) interchangeably. i386 is also called IA32, meaning "I"ntel "A"rchitecture with "32" bit addressing.

The reason why (relatively) recent CPUs such as Pentium 4 are also called x86 is that they are still backward compatible with the 80386. This means that any instruction supported by the 80386 is also supported by Pentium 4, which does not necessarily mean that any old program written for old computers works on recent ones. For old software to run on newer machines, OS- or compiler-level ABI/API compatibility is also required. However, this compatibility (especially at the OS level) is maintained very carefully, because the most important thing for selling computers is whether they have lots of applications to run (that's one of the reasons IA64 could not become popular, and that's why Windows phones never pop into your choices).

x64, x86_64, AMD64, EM64T, Intel 64

They are extended versions of x86, which can handle 64 bit address spaces (meaning that they can utilize more memory natively) and have other advantages as well. In this sense they are called x86_64, or x64 for short. A confusing point is that x64 is newer than x86 although the number is smaller. You may go crazy if I tell you about x32, which is a concept newer than x64. It's like a mixture (combining the good things) of the 32 and 64 bit modes, but you still don't see it often.

The reason this is also called AMD64 is that AMD was the first (major) vendor to extend x86 to 64 bit. After that Intel created EM64T, which is compatible with AMD64. This was a big deal because AMD was/is a vendor creating CPUs compatible with Intel architectures, but on this one AMD was a step ahead of Intel. Today some people say Radeon Instinct, a GPU for deep learning which AMD claims is faster than Titan X, is the first big deal from AMD in 10 years; the one 10 years ago is this AMD64. :)

Wait, but why didn't Intel name it IA64 instead of EM64T?? That's because at that time IA64 already existed, and it was a brand-new 64 bit architecture that had no compatibility with x86. Intel's first aim was to replace the old x86 with IA64 (the actual implementation was named Itanium), but for many reasons it failed. What is really confusing is that after several years Intel gave EM64T a new name, Intel 64!

So due to the very complex history shown here, x64, x86_64, AMD64, EM64T and Intel 64 now all refer to the same instruction set architecture. *3

*3: There are a lot of differences in fact, especially in additional functionalities such as Intel VT or SSE, but that's not the topic of this post.

Installing Debian GNU/Linux 8 (Jessie) into Thinkpad x260

I got a thinkpad x260 and installed Debian GNU/Linux 8 (which I also use for my desktop and servers).

Here are some tips for someone (or no one?) who wants to do the same.

Base Installation

I basically followed the normal procedure. The point is to shrink C: using Windows tools and never let the Debian installer modify existing partitions. This way you greatly reduce the chance of making a serious mistake.

- Create a recovery medium using one of the Windows official tools. Type "recovery" in the start menu and you'll find it.

- Delete the recovery partition using the "management tool" you can find in the "control panel". Be careful: by doing this you lose the way to create a recovery medium again. Make sure the one you just created works perfectly.

- Shrink the existing partition for C: with the same tool.

- Disable the "Secure Boot" functionality from the BIOS menu.

- Create a bootable USB of Debian and install it. Choose "guided partitioning" and "available free space" when selecting where to install. Never do partitioning manually unless you're really sure what you are doing.

Devices

Wifi

Unfortunately the wifi inside the X260 (Intel Wireless 8260) does not work with the Linux kernel included in Debian 8. I upgraded the kernel to the latest stable (4.9), but then X got some error and did not work (the same actually happens with my desktop, so it might be a problem between X and the latest kernel). For those who want to use a self-built kernel, the official guide from Debian is the easiest to follow.

Instead I just use a WLI-UC-GNM2 from Buffalo that was sleeping in my desk.

To make it work I had to add the contrib and non-free repos to /etc/apt/sources.list and install the firmware-ralink package.

After that it works perfectly with no command-line settings.

Monitor

It works at the maximum resolution (1920 x 1080). I have never tried the HDMI port though. The brightness control buttons on the keyboard do not work with the default settings (I use MATE as my desktop environment).

Track point and touch pad

They both work perfectly. Scrolling with two fingers on the touch pad also works.

Sound

Speaker and mic both work. The volume control buttons on the keyboard also work.

Performance

Battery

Currently it works normally; I mean I don't feel the battery consumption is noticeably larger than on Windows. Note: having less battery life on Linux can actually happen because ACPI-related stuff is one of the most troublesome things to support correctly; that's why hibernation never works in Linux. :p

CPU

Haven't tested it properly yet.

Memory

I think the weakest part of the Thinkpad X260 is the memory bandwidth. This machine has only 1 memory slot, so only 1 memory channel is usable (although the CPU has two channels). If you wanna do some big-data stuff or machine learning, I recommend buying a T or X1 series machine (or something from another vendor) that has at least two memory slots.

Other comments

Intel wifi works with Stretch (testing version)

As Intel Wireless 8260 has been supported since kernel 4.1, it works without any hurdle on Debian 9 (Stretch, a.k.a. testing, the next stable).

Note that you still have to install non-free binary firmware (the firmware-iwlwifi package from the non-free repo).

A drawback of installing the testing release (other than the fact that security updates are not provided as often) might be the gcc version, which is 6.3 and can be too new for those who have ultra-legacy code written for gcc 3.x or even 2.x.

Something strange with Windows

When I was installing anti-virus software on Windows 10 (not Linux), it seemed like some of the input signals were dropped. Although the load was extremely high for all components (CPU, memory IO, disk IO) at that time, this was, I guess, a bit strange. What happened was that during the installation the mouse cursor got extremely heavy, and it was not just delayed but some signals were definitely ignored: a mouse click was successful only about 75% of the time.

I don't know if it is due to the hardware or a bug in Windows 10. It might be the case that Windows ignores some signals on purpose??? (It's not related to Debian, but this is the very first time I have seen such a phenomenon, so I put it here.) One thing that is true is that this has never happened so far in Linux, even under a very high load such as a kernel re-compilation.

Pseudo Type Checking in C using Struct

Requirement

Let the C compiler recognize two types as different, even when the two are actually equivalent in terms of size and contents.

Idea

- Wrap each type in a struct to add type information, as a compiler recognizes two structs (even with the same size) as different.
- Do not actually define dummy structs, but use pointers to them to:
  - avoid meaningless coding
  - expect that the types are stored in registers for speedup

Example

    typedef struct A1* a1;
    typedef struct A2* a2;
    // a function that accepts type a1 only
    void f(a1 p){ }
    // a function that accepts type a2 only
    void g(a2 p){ }

    a1 make_a1(int n){
      return (a1)(unsigned long)n;
    }

    a2 make_a2(int n){
      return (a2)(unsigned long)n;
    }

    int main(void){
      a1 p1 = make_a1(0);
      a2 p2 = make_a2(1);

      f(p1);
      g(p2);
    }

The code above compiles with no relevant warnings.

Actual definitions of A1 and A2 are not needed because creating a pointer to a struct does not require the actual definition of the struct (otherwise, recursive data structures such as linked lists could not be written).

However, once the arguments of f and g are flipped by mistake (like f(p2) and g(p1)), you get warnings:

    typecheck.c: In function 'main':
    typecheck.c:20:3: warning: passing argument 1 of 'f' from incompatible pointer type [enabled by default]
       f(p2);
       ^
    typecheck.c:4:6: note: expected 'a1' but argument is of type 'a2'
     void f(a1 p){ }
          ^
    typecheck.c:21:3: warning: passing argument 1 of 'g' from incompatible pointer type [enabled by default]
       g(p1);
       ^
    typecheck.c:6:6: note: expected 'a2' but argument is of type 'a1'
     void g(a2 p){ }
          ^

A disadvantage of this method, compared to actually defining wrapper structs, is that people reading the code can be confused because there are no definitions of A1 and A2 (I actually was when analyzing QEMU's source code, and I learned this trick from one of the mailing list entries).

Follow-up (Feb 2017)

This might be cleaner. The difference is that this version does not need never-used names for the structs; instead it just defines empty structs (which are a GNU C extension, so this assumes GCC or a compatible compiler).

    typedef struct {}* a1; // No longer need the name A1
    typedef struct {}* a2; // No longer need the name A2

    void f(a1 p){ }
    void g(a2 p){ }

    a1 make_a1(int n){
      return (a1)(unsigned long)n;
    }

    a2 make_a2(int n){
      return (a2)(unsigned long)n;
    }

    int main(void){
      a1 p1 = make_a1(0);
      a2 p2 = make_a2(1);

      f(p1);
      g(p2);
    }

LaTeX and PDF Tips

From the access logs it's obvious that the LaTeX and PDF tips page (in Japanese) is one of the most popular pages besides my profile, and it seems to be somewhat highly ranked by Google, so I put an English translation here.

Equalize the Column Heights of the Last Page

flushend.sty is the most convenient way to equalize the column heights of the last page of a 2-column PDF. Download it to the same directory as your .tex and do

\usepackage{flushend}

It's especially useful when you submit a paper to an IEEE conference (whose LaTeX style shows you how to equalize the heights in a super troublesome way).

Concatenate Two PDFs

The pdftk command lets you concatenate two PDFs, such as an abstract and a poster draft. Do as follows:

$ pdftk input_filenames output output_filename

Note that output is a command option, which you should put as-is.

Create a PDF with the US Letter Size

If you want to set the size of your PDF to US Letter (also referred to simply as 'Letter' size) but you don't know how to tweak the style file, the paper option of dvipdfm (or dvipdfmx for CJK people) can help you.

$ dvipdfm -p letter input.dvi

Citations per Article in ACM Digital Library

The average citations per article in the ACM Digital Library.

- Microsoft Research: 34.20 source

- Google: 27.13 source

- Argonne National Lab: 17.25 source

- Harvard: 17.07 source

- National University of Singapore: 11.00 source

- Tsinghua University: 7.63 source

- University of Tokyo: 7.32 source

- AIST: 4.75 source

Note: the numbers may be biased due to differences in the main research areas of each institution and many other factors, so comparing small differences of a few points can be meaningless.